We can do that by solving a problem that has to perform an exhaustive search.

Dfs java stack full#

To be sure that our implementation always returns a solution if one exists, we have to test that our search explores the full search space. Since there are many combinations of coloring possible, we would not have noticed if we only explored a subset of all search nodes in the search tree. We also have not sufficient tested, whether our implementation always returns a solution if one exists. Therefore, we need to approach a different problem with a larger search space. The map coloring problem is too small to expect any performance improvements from a parallel solution. ( defn transduce-1 "apply a transducer on a single value" ( let ( f nil x ) ) ) ( defn remove-worker-from ( filterv # ( not= % worker ) worker-pool ) ) given a vector of channels (our async workers are also channels), async/alts!! will return the first element that is ready for consumption and the channel it originates from ( defn next-channel-value ( if ( !! chan root-problem ) ( let ( async/close! chan ) result ) ) ) ) "If multiple threads access a tree set concurrently, and at least one of the threads modifies the set, it must be synchronized externally." In order to enable concurrent write and reads on our priority queue, we implement the Buffer-Protocol of the core.async-library that also adds the ability to back pressure the allocation of resources. We can use a to implement such a generic priority queue, but this class is not synchronized, i.e. For parallel depth-first search, we need a priority queue that prioritizes on depth in the graph instead.

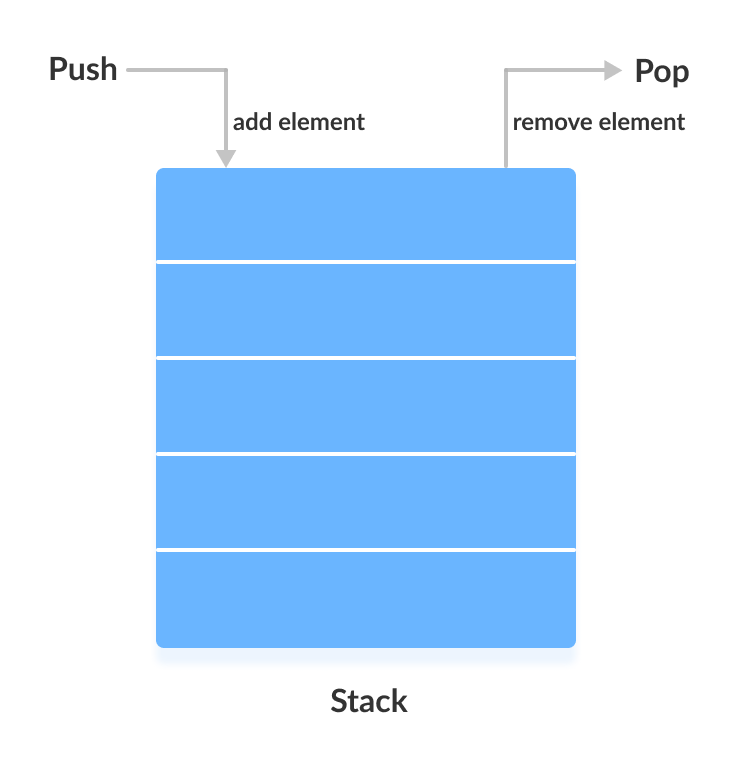

To solve this problem, it is helpful to interpret a stack as a priority queue that prioritizes on time added. If there are multiple parallel workers searching the graph depth-first, the last added item to the stack is no longer guaranteed to be the deepest known node. Since there is only one producer of elements for the stack, it is guaranteed that the last added element of the stack is always the deepest known node. The implementation of sequential depth-first search uses a stack to store the nodes of the graph to traverse. Hence, we try a different approach that makes use of a library called core.async, which is inspired by the paper "Communicating sequential processes" by Tony Hoare, to implement a parallel depth-first search that addresses both of these problems. The algorithm would amass as many tasks as there are states to explore without applying back pressure against the exhaustion of resources, and no prioritization of depth over breadth. The second problem lies in the eagerness of the recursion.

Let us have a look at our sequential backtracking search and understand, why that is the case.

First, the reducers library does not parallelize lazy sequences and second and foremost, the way we construct our lazy sequence does not fit well with a fork-join approach.

Dfs java stack code#

But soon I realized, that the functional backtracking code cannot be parallelized by the reducers library for various reasons.

In Clojure, it is even possible to run certain data transformations in parallel just by using the parallel running versions of common functions like reduce, filter or map of the reducers library. As I wrote the post, I thought it would be trivial to write a parallel depth-first search once that I have a functional solution, because the functional programming style is a natural fit for leveraging the fork-join model. In my previous post Constraint Satisfaction Problems and Functional Backtracking Search I presented a functional solution for solving CSPs with backtracking search, which is a variant of depth-first search. I've been trying to solve this depthFirstSearch Algorithm and while I have followed the pseudocode properly, I've been having severe trouble trying to declare a vertex within the algorithm.Solving Search Problems with Parallel Depth-First Search Introduction

0 kommentar(er)

0 kommentar(er)